Inspired by Bret Victor

In his 2014 talk Seeing Spaces, Bret Victor envisioned an environment where technology becomes transparent, where you effortlessly see inside the minds of robots as you build them. This is the dream of everyone building computer vision for the physical world, and is at the core of what we're building at Rerun.

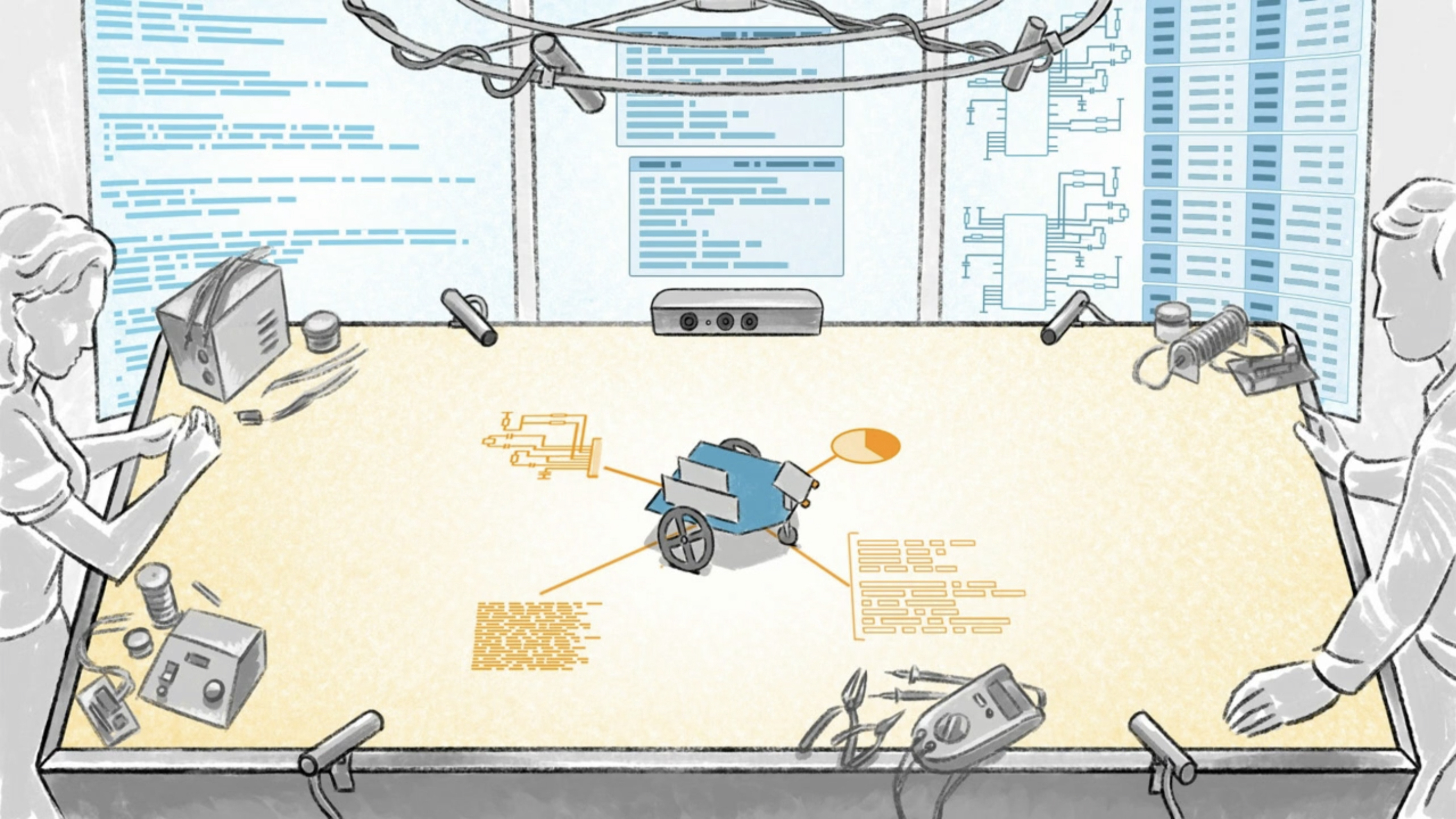

A depiction of what a Seeing Space might look like from Bret Victor’s talk Seeing Spaces

Like most interesting people, Bret is hard to summarize, but you might say he’s a designer/engineer turned visionary/researcher who talks a lot about interfaces and tools for understanding. He's on a lifelong mission to change how we think and communicate using computers.

You know a body of work is special when just taking a small aspect of it, potentially out of context, still produces great ideas. This is without a doubt true of Bret Victor's work, which has been the inspiration for Figma, Webflow, Our World in Data, and many others.

The overlooked inspiration behind Rerun

The best articulation I know of the need to see inside your systems, particularly those with a lot of internal complexity, comes from the talk Seeing Spaces. It’s seldom referenced, but I keep going back to it and am always struck by how prescient it was back in 2014.

The full talk Seeing Spaces by Bret Victor.

The context of the talk is roughly the future of maker spaces. In it he makes two main points:

- For a growing number of projects with embedded intelligence (robotics, drones, etc.), the main challenge isn’t putting them together, but understanding what they are doing and why. What you need here are seeing-tools, and we don't really have many of those.

- If you’re really serious about seeing, you build a dedicated room (think NASA’s mission control room). We therefore need Seeing Spaces. These spaces would be shared rooms that embed all the seeing-tools you need, similar to how maker spaces have a shared set of manufacturing equipment.

NASA's Shuttle Control Room is built for serious seeing. Photo Credit: NASA

A physical space for seeing is interesting, and if you follow Bret’s work you can see the lineage from this, through The Humane Representation of Thought, to his current project Dynamicland. Whether or not creating a dedicated physical space is the right way to go, for most teams it’s not practical or the top priority. The first problem is getting “regular” software seeing-tools in place that make it easier to build and debug intelligent systems.

This is essentially what we are doing at Rerun. We are building software-based seeing-tools for computer vision and robotics. For teams that want to go all the way to Seeing Spaces, the building blocks they need will all be there.

What is a seeing-tool?

Seeing-tools help you see inside your systems, across time and across possibilities. Seeing inside your systems consists of extracting all relevant data, like sensor readings or internal algorithm state, transmitting it to the tool, and visualizing it. This should all be built in and require no additional effort.

A depiction of what seeing across time might look like from Bret Victor’s talk Seeing Spaces

Seeing across time means visualizing whole sequences and making it possible to explore them by controlling time. These sequences could take place either in real-world time or in compute time, like steps in an optimization. Seeing across possibilities means comparing sequences to each other, for example over different parameter settings. When training machine learning models, this is usually called experiment tracking.

In essence, a seeing-tool is an environment that lets you move smoothly from live interactive data visualization to organizing and tracking experiments.
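
To make the two axes concrete, here is a minimal sketch in plain Python. It is not Rerun's API: the `Timeline` class and the `run_descent` helper are hypothetical names invented for illustration. Each run logs its loss against the optimization step (compute time), and keeping one timeline per learning rate lets you scrub all runs to the same step and compare them.

```python
import bisect
from typing import Any, Dict, List, Optional


class Timeline:
    """Hypothetical store for one logged signal, ordered by time or step."""

    def __init__(self) -> None:
        self._times: List[float] = []
        self._values: List[Any] = []

    def log(self, t: float, value: Any) -> None:
        # Keep entries sorted so out-of-order arrivals still scrub correctly.
        i = bisect.bisect_right(self._times, t)
        self._times.insert(i, t)
        self._values.insert(i, value)

    def at(self, t: float) -> Optional[Any]:
        # Latest value logged at or before t, or None if nothing exists yet.
        i = bisect.bisect_right(self._times, t)
        return self._values[i - 1] if i else None


def run_descent(learning_rate: float, steps: int = 5) -> Timeline:
    """Toy optimization: gradient descent on f(x) = x**2, starting at x = 1."""
    timeline = Timeline()
    x = 1.0
    for step in range(steps):
        timeline.log(step, x * x)      # log the loss in compute time
        x -= learning_rate * 2.0 * x   # the gradient of x**2 is 2x
    return timeline


# Across possibilities: one timeline per parameter setting.
runs: Dict[str, Timeline] = {f"lr={lr}": run_descent(lr) for lr in (0.1, 0.5)}

# Across time: scrub every run to the same step and compare.
for step in (0, 1, 4):
    print(step, {name: round(tl.at(step), 4) for name, tl in runs.items()})
```

Querying by "latest value at or before t" is one possible design choice. It lets signals logged at different rates line up on a shared time cursor.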

Principles for a computer vision focused seeing-tool

Every team that builds computer vision for the physical world needs tools to visualize their data and algorithms, and currently most teams build custom tools in-house. Prior to Rerun, we built such tools for robotics, autonomous driving, 3D-scanning, and augmented reality. We believe there are a few key principles we need to follow in order to build a true seeing-tool that can unlock progress for all of computer vision.

Separate visualization code from algorithm code

It's tempting to write ad-hoc visualization code inline with your algorithm code. It requires no up-front investment; just use OpenCV to paint a picture, and show it with cv.imshow. However, this is a mistake because it makes your codebase hard to work with, and constrains what and where you can visualize.

If you instead keep your visualizations separate, your codebase stays clean and more powerful analysis becomes possible. The approach works for devices without screens, and you can explore your systems holistically across time and different settings.
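
As a rough illustration of that separation, the sketch below has the algorithm only record what happened, while a stand-in viewer decides later what to show. The `Recorder` class, the `view` function, and the entity paths are all hypothetical and are not taken from any real library. The "algorithm" is a toy that finds the brightest pixel in each frame.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Sequence, Tuple

Frame = Sequence[Sequence[int]]


@dataclass
class Recorder:
    """Hypothetical log sink. A real tool would stream to a viewer or a file."""
    events: List[Dict[str, Any]] = field(default_factory=list)

    def log(self, path: str, step: int, value: Any) -> None:
        # Only record what happened; nothing is drawn here.
        self.events.append({"path": path, "step": step, "value": value})


def brightest_pixels(frames: Sequence[Frame], rec: Recorder) -> List[Tuple[int, int]]:
    """Toy algorithm: locate the brightest pixel (row, col) in each frame."""
    results = []
    for step, frame in enumerate(frames):
        row, col = max(
            ((r, c) for r in range(len(frame)) for c in range(len(frame[r]))),
            key=lambda rc: frame[rc[0]][rc[1]],
        )
        rec.log("camera/frame", step, frame)
        rec.log("tracker/brightest", step, (row, col))
        results.append((row, col))
    return results


def view(events: List[Dict[str, Any]], path: str) -> List[str]:
    """Stand-in viewer: lives outside the algorithm and can be swapped freely."""
    return [f"step {e['step']}: {e['value']}" for e in events if e["path"] == path]


rec = Recorder()
frames = [[[0, 1], [2, 3]], [[9, 1], [2, 3]]]
brightest_pixels(frames, rec)
print("\n".join(view(rec.events, "tracker/brightest")))
```

Because the algorithm never calls a drawing function, the same recorded events could be rendered in a window, written to disk on a headless device, or compared across runs.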

You can't predict all visualization needs up-front

For computer vision, visualization is deeply intertwined with understanding. As developers build new things, they will invariably need to visualize what they are doing in unforeseen ways. It therefore needs to be easy to add new types of visualizations without having to modify the visualizer or the supporting data infrastructure, which calls for powerful, flexible primitives and easy ways to extend the tools.

When prototyping, a developer should for instance be able to extend a point cloud visualization with motion vectors without recompiling schemas or leaving their Jupyter notebook.
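
One way to picture this kind of extensibility is shown in the sketch below, under the assumption that an entity is an open-ended bag of named components and that the viewer looks up drawing functions by component name. The `RENDERERS` registry, the `renderer` decorator, and the component names are hypothetical. They illustrate the idea only and do not describe how Rerun implements it.

```python
from typing import Any, Callable, Dict, List

# Hypothetical viewer-side registry: component name -> function that draws it.
RENDERERS: Dict[str, Callable[[Any], str]] = {}


def renderer(component: str):
    """Decorator that registers a drawing function for one component name."""
    def register(fn: Callable[[Any], str]) -> Callable[[Any], str]:
        RENDERERS[component] = fn
        return fn
    return register


@renderer("points")
def draw_points(points: List[tuple]) -> str:
    return f"{len(points)} points"


def render(entity: Dict[str, Any]) -> List[str]:
    """Draw every component; unknown ones fall back to a generic summary."""
    lines = []
    for name, data in entity.items():
        draw = RENDERERS.get(name)
        lines.append(draw(data) if draw else f"{name}: {len(data)} raw values")
    return lines


# An entity is an open-ended bag of components, not a fixed schema.
cloud: Dict[str, Any] = {"points": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]}

# Later, in a notebook cell: attach motion vectors to the same entity.
cloud["motion_vectors"] = [(0.1, 0.0, 0.0), (0.0, 0.2, 0.0)]
before = render(cloud)  # motion vectors already show up via the fallback


@renderer("motion_vectors")
def draw_motion(vectors: List[tuple]) -> str:
    longest = max(sum(v * v for v in vec) ** 0.5 for vec in vectors)
    return f"{len(vectors)} arrows, longest {longest:.2f}"


after = render(cloud)  # now drawn by the newly registered function
print(before, after, sep="\n")
```

Nothing about the point cloud's definition or the `render` function had to change to support the new data, which is the property the paragraph above asks for.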

The same visualizations from prototyping to production

Algorithms tend to run in very different environments as they progress from prototyping to production. The first prototype code might be written in a Colab notebook, while the production environment could be an embedded device on an underwater robot. Giving access to the same visualizations across these environments makes it easier to compare results and removes duplicated effort.

The resulting increase in iteration speed can be profound. I've personally seen the time needed to go from observing a problem in production, through diagnosing it and designing a solution, to deploying a fix shrink from days to minutes.
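
A simple way to get the same visualizations in both settings is to keep the logging calls identical and swap only where the events go. The sketch below assumes two hypothetical sinks, `MemorySink` for a notebook and `FileSink` for a headless device, plus a toy `mean_depth` algorithm. None of these names come from a real library.

```python
import json
import os
import tempfile
from typing import Any, Dict, List, Sequence


class MemorySink:
    """For notebooks: keep events in memory for immediate inspection."""

    def __init__(self) -> None:
        self.events: List[Dict[str, Any]] = []

    def write(self, event: Dict[str, Any]) -> None:
        self.events.append(event)


class FileSink:
    """For headless devices: append events as JSON lines to replay later."""

    def __init__(self, path: str) -> None:
        self.path = path

    def write(self, event: Dict[str, Any]) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(event) + "\n")


def load_events(path: str) -> List[Dict[str, Any]]:
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def mean_depth(readings: Sequence[float], sink) -> float:
    """Toy algorithm: running mean of sonar readings, logged at every step."""
    total = 0.0
    for step, reading in enumerate(readings, start=1):
        total += reading
        sink.write({"path": "sonar/mean_depth", "step": step, "value": total / step})
    return total / len(readings)


def summarize(events: List[Dict[str, Any]]) -> List[str]:
    """Stand-in visualization, shared by both environments."""
    return [f"step {e['step']}: {e['path']} = {e['value']:.2f}" for e in events]


readings = [2.0, 4.0, 6.0]

notebook = MemorySink()
mean_depth(readings, notebook)  # prototyping: inspect right away

with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "robot.jsonl")
    mean_depth(readings, FileSink(log_path))  # production: record on the device
    replayed = load_events(log_path)          # later: replay on a workstation

print(summarize(notebook.events) == summarize(replayed))
```

The algorithm code is identical in both runs, so a recording pulled off the device can be compared directly with what was seen during prototyping.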

Why do seeing-tools matter?

Seeing-tools are needed to effectively understand what we are building. They enable our work to span from tinkering to doing experimental science. It’s currently way too hard to build great computer-vision-based products for the physical world, largely due to the lack of these tools.

The recent progress in AI has increased the number of people who work on AI-powered products. As any practitioner in the field knows, the process of building these products is less like classic engineering and more a mix of tinkering and experimental science. As we as a community start deploying a lot more computer vision and other AI in real-world products, great seeing-tools will be what makes products succeed. At Rerun, we have made it our mission to increase the number of successful computer vision products in the physical world, and to get there we're building seeing-tools.

If you're interested in what we're building at Rerun, then join our waitlist or follow Rerun on Twitter.