Objectron

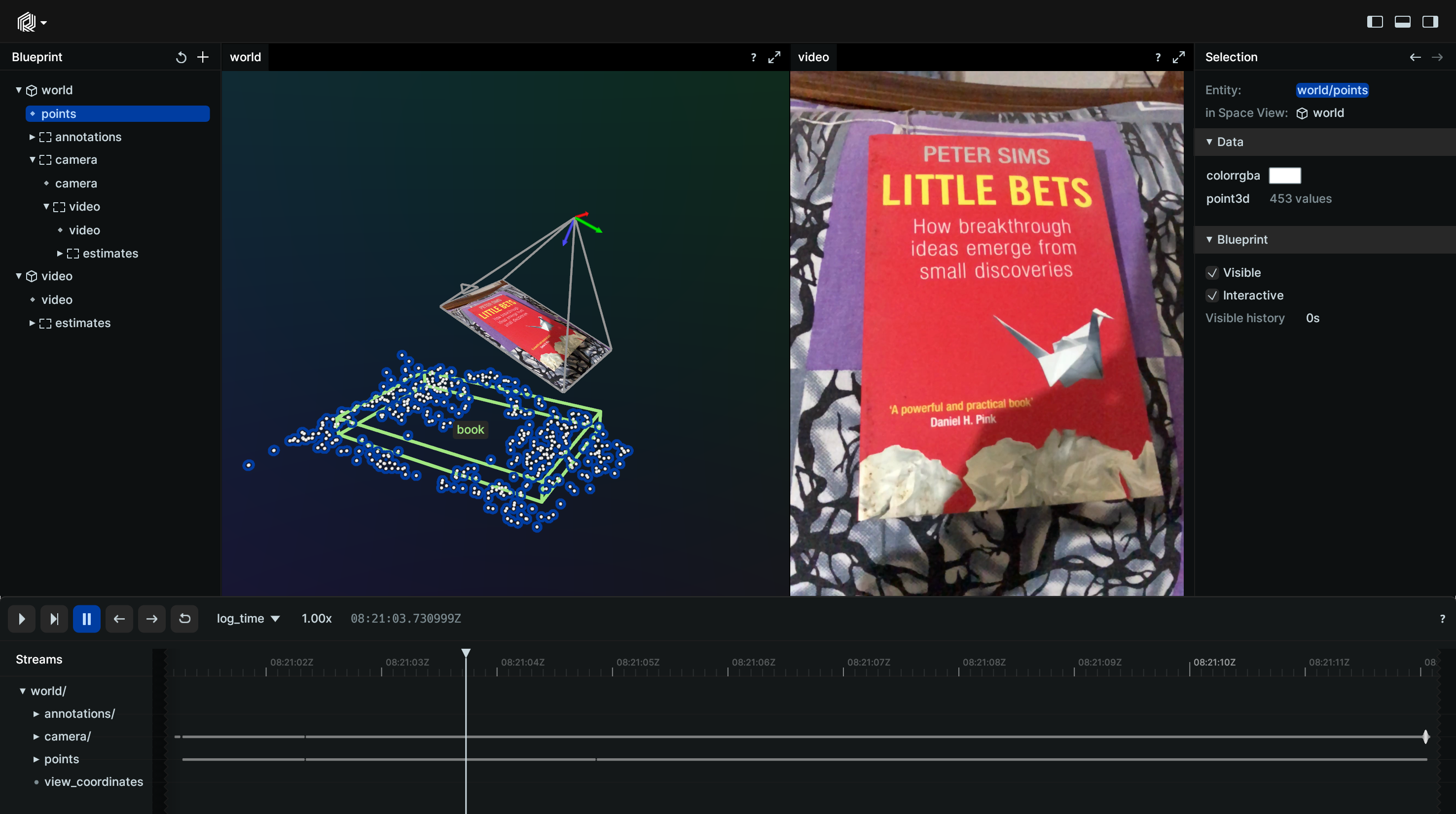

Visualize the Google Research Objectron dataset, including camera poses, sparse point clouds, and surface characterization.

Used Rerun types

Points3D, Boxes3D, EncodedImage, Transform3D, Pinhole

Background

This example visualizes the Objectron dataset, a collection of object-centric video clips accompanied by AR session metadata. Each sample provides high-resolution images, object pose, camera pose, a point cloud, and surface plane information, so the object can be viewed from various angles. The dataset also provides manually annotated 3D bounding boxes that give each object's position and orientation.

Logging and visualizing with Rerun

The visualizations in this example were created with the following Rerun code:

Timelines

For each processed frame, all data sent to Rerun is associated with the two timelines frame and time.

    rr.set_time("frame", sequence=sample.index)
    rr.set_time("time", duration=sample.timestamp)

Video

The camera is modeled with the Pinhole and Transform3D archetypes, which together provide the 3D view and the camera perspective.

    rr.log(
        "world/camera",
        rr.Transform3D(translation=translation, rotation=rr.Quaternion(xyzw=rot.as_quat())),
    )

    rr.log(
        "world/camera",
        rr.Pinhole(
            resolution=[w, h],
            image_from_camera=intrinsics,
            camera_xyz=rr.ViewCoordinates.RDF,
        ),
    )

The input video is logged as a sequence of EncodedImage objects to the world/camera entity.

    rr.log("world/camera", rr.EncodedImage(path=sample.image_path))

Sparse point clouds

Sparse point clouds from ARFrame are logged as a Points3D archetype to the world/points entity.

    rr.log("world/points", rr.Points3D(positions, colors=[255, 255, 255, 255]))

Annotated bounding boxes

Bounding boxes annotated from ARFrame are logged as Boxes3D, with each box described by its center, half-sizes, rotation, color, and category label.
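To make the box parameters concrete, here is a minimal pure-Python sketch of how a center, a full-extent scale, and an xyzw quaternion determine the eight corners of an oriented box. It is an illustration only, not Rerun's implementation: the helper names `rotate_by_quaternion` and `box_corners` are hypothetical, the input values are made up, and it assumes the rotation is applied about the box center.

```python
import math
from itertools import product


def rotate_by_quaternion(q_xyzw, v):
    """Rotate vector v by a unit quaternion given in (x, y, z, w) order."""
    x, y, z, w = q_xyzw
    # t = 2 * cross(q_vec, v)
    tx = 2.0 * (y * v[2] - z * v[1])
    ty = 2.0 * (z * v[0] - x * v[2])
    tz = 2.0 * (x * v[1] - y * v[0])
    # v' = v + w * t + cross(q_vec, t)
    return (
        v[0] + w * tx + (y * tz - z * ty),
        v[1] + w * ty + (z * tx - x * tz),
        v[2] + w * tz + (x * ty - y * tx),
    )


def box_corners(center, scale, q_xyzw):
    """Return the 8 corners of a box from its center, full extents, and rotation."""
    # Half-sizes are half of the full extents along each local axis.
    half = [0.5 * s for s in scale]
    corners = []
    for signs in product((-1.0, 1.0), repeat=3):
        local = [sign * h for sign, h in zip(signs, half)]
        rotated = rotate_by_quaternion(q_xyzw, local)
        corners.append(tuple(c + r for c, r in zip(center, rotated)))
    return corners


# Made-up box: 2 x 4 x 6 extents, rotated 90 degrees about the z axis.
s = math.sqrt(0.5)
corners = box_corners((1.0, 2.0, 3.0), (2.0, 4.0, 6.0), (0.0, 0.0, s, s))
for corner in corners:
    print(tuple(round(c, 3) for c in corner))
```

Halving the scale mirrors the half_sizes argument, and the quaternion ordering matches the xyzw convention used when constructing rr.Quaternion. In the example itself, each box is logged as follows: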

    rr.log(
        f"world/annotations/box-{bbox.id}",
        rr.Boxes3D(
            half_sizes=0.5 * np.array(bbox.scale),
            centers=bbox.translation,
            rotations=rr.Quaternion(xyzw=rot.as_quat()),
            colors=[160, 230, 130, 255],
            labels=bbox.category,
        ),
        static=True,
    )

Setting up the default blueprint

The default blueprint is configured with the following code:

    blueprint = rrb.Horizontal(
        rrb.Spatial3DView(origin="/world", name="World"),
        rrb.Spatial2DView(origin="/world/camera", name="Camera", contents=["/world/**"]),
    )

In particular, we want to reproject the points and the 3D annotation box in the 2D camera view corresponding to the pinhole logged at "/world/camera". This is achieved by setting the view's contents to the entire "/world/**" subtree, which includes both the pinhole transform and the image data, as well as the point cloud and the 3D annotation box.
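Conceptually, this reprojection is a pinhole projection of 3D points through the camera intrinsics. The following pure-Python sketch illustrates the idea; it is not how Rerun implements it. It assumes the point is already expressed in the camera frame with RDF axes (x right, y down, z forward), and both the `project_point` helper and the intrinsics values are hypothetical.

```python
def project_point(intrinsics, point_cam):
    """Project a 3D point in camera coordinates (RDF) to pixel coordinates.

    Returns None for points at or behind the camera plane.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None
    # Homogeneous image coordinates: intrinsics @ [x, y, z]
    u = intrinsics[0][0] * x + intrinsics[0][1] * y + intrinsics[0][2] * z
    v = intrinsics[1][0] * x + intrinsics[1][1] * y + intrinsics[1][2] * z
    w = intrinsics[2][0] * x + intrinsics[2][1] * y + intrinsics[2][2] * z
    # Perspective divide yields pixel coordinates.
    return (u / w, v / w)


# Made-up intrinsics: focal lengths and principal point in pixels.
fx, fy, cx, cy = 1500.0, 1500.0, 720.0, 960.0
K = [
    [fx, 0.0, cx],
    [0.0, fy, cy],
    [0.0, 0.0, 1.0],
]

# A point on the optical axis lands on the principal point.
print(project_point(K, (0.0, 0.0, 2.0)))
# A point to the right of the axis lands at a larger u coordinate.
print(project_point(K, (0.5, 0.0, 2.0)))
```

Points logged in world coordinates would first need to be brought into the camera frame using the inverse of the camera pose, which is why the view needs access to the transform, the pinhole, and the 3D data in the same subtree.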

Run the code

To run this example, make sure you have the required Python version, the Rerun repository checked out and the latest SDK installed:

    pip install --upgrade rerun-sdk  # install the latest Rerun SDK
    git clone git@github.com:rerun-io/rerun.git  # Clone the repository
    cd rerun
    git checkout latest  # Check out the commit matching the latest SDK release

Install the necessary libraries specified in the requirements file:

    pip install -e examples/python/objectron

To experiment with the provided example, simply execute the main Python script:

    python -m objectron  # run the example

You can specify the Objectron recording:

    python -m objectron --recording {bike,book,bottle,camera,cereal_box,chair,cup,laptop,shoe}

If you wish to customize it, explore additional features, or save it, use the CLI with the --help option for guidance:

    python -m objectron --help