What is Rerun?

Rerun is a data platform for Physical AI that helps you understand and improve complex processes involving rich multimodal data like 2D, 3D, text, time series, and tensors.

It combines simple and flexible data logging with a powerful visualizer and query engine, designed specifically for domains like robotics, spatial computing, embodied AI, computer vision, simulation, and any system involving sensors and signals that evolve over time.

The problem

Building intelligent physical systems requires rapid iteration on both data and models. But teams often get stuck because:

- Data from sensors arrives at different rates and in different formats

- Understanding what went wrong requires visualizing multimodal data (images, point clouds, sensor readings) together in time

- Extracting, cleaning, and preparing data for training involves too many manual steps

- Switching between different tools for each step slows everything down

The best robotics teams minimize their time from new data to training. Rerun gives you the unified infrastructure to make that happen.

The Rerun Data Platform

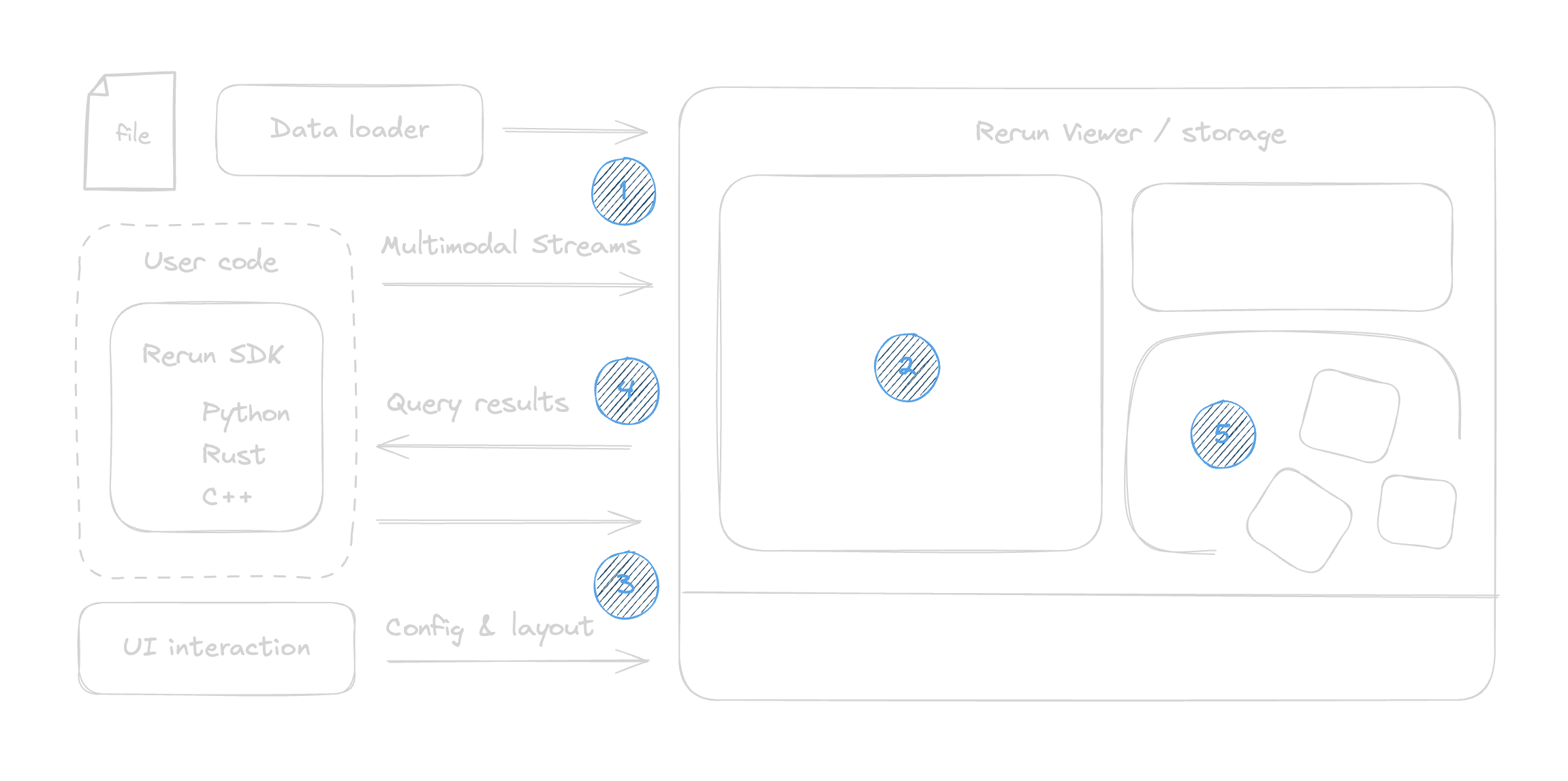

Rerun provides an integrated solution for working with multimodal temporal data:

Time-aware data model: At its core is an Entity Component System (ECS) designed for robotics and XR applications. This model understands both spatial relationships and temporal evolution, making it natural to work with sensor data, transforms, and time-series information.
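To make the idea of a time-aware entity/component model more concrete, here is a minimal sketch in plain Python. It is a toy illustration only, not the Rerun SDK or its internals: the `ToyStore` class, its `log` and `latest_at` methods, and the entity paths are all hypothetical names chosen for this example. The point it shows is that each (entity, component) pair keeps its own timeline, so streams logged at different rates can still be sampled at a common point in time.

```python
from bisect import bisect_right
from collections import defaultdict


class ToyStore:
    """Toy time-indexed entity/component store (illustration only, not the Rerun API)."""

    def __init__(self):
        # (entity_path, component) -> list of (time, value), kept sorted by time
        self._rows = defaultdict(list)

    def log(self, entity_path, component, time, value):
        """Record a component value for an entity at a given time."""
        rows = self._rows[(entity_path, component)]
        idx = bisect_right([t for t, _ in rows], time)
        rows.insert(idx, (time, value))

    def latest_at(self, entity_path, component, time):
        """Return the most recent value logged at or before `time`, or None."""
        rows = self._rows.get((entity_path, component), [])
        idx = bisect_right([t for t, _ in rows], time)
        return rows[idx - 1][1] if idx > 0 else None


store = ToyStore()

# A camera logging at 10 Hz and a base pose logging at 25 Hz (hypothetical data).
store.log("robot/camera", "image_id", 0.00, "frame_0")
store.log("robot/camera", "image_id", 0.10, "frame_1")
store.log("robot/base", "position", 0.00, (0.0, 0.0))
store.log("robot/base", "position", 0.04, (0.1, 0.0))
store.log("robot/base", "position", 0.08, (0.2, 0.0))

# Sample both streams at the same instant, t = 0.09 s.
camera_at_t = store.latest_at("robot/camera", "image_id", 0.09)
position_at_t = store.latest_at("robot/base", "position", 0.09)
print(camera_at_t, position_at_t)
```

In this sketch, asking for the state at one instant returns the latest camera frame and the latest pose known at that time, even though the two streams never share a timestamp after the first sample.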

Built-in visualization: A fast, embeddable visualizer lets you see your data as 3D scenes, images, plots, and text—all synchronized and explorable through time. Build layouts and customize visualizations interactively or programmatically.

Query and export: Extract clean dataframes for analysis in Pandas, Polars, or DuckDB. Use recordings to create datasets for training and evaluating your models.
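As a rough sketch of what a "clean dataframe" extracted from time-stamped recordings can look like, the following plain-Python example flattens two streams with different rates into one row per timestamp, carrying the last known value forward. It does not use the Rerun query API; the `to_rows` helper, the column names, and the sample values are assumptions made up for illustration. The resulting rows are written as CSV, which tools like Pandas, Polars, or DuckDB can read.

```python
import csv
import io


def to_rows(streams):
    """Flatten time-stamped streams into one row per timestamp (forward-filled).

    streams: dict mapping column name -> list of (time, value), sorted by time.
    """
    times = sorted({t for samples in streams.values() for t, _ in samples})
    cursors = {name: 0 for name in streams}
    latest = {name: None for name in streams}
    rows = []
    for t in times:
        for name, samples in streams.items():
            i = cursors[name]
            # Advance past every sample at or before t, remembering the newest one.
            while i < len(samples) and samples[i][0] <= t:
                latest[name] = samples[i][1]
                i += 1
            cursors[name] = i
        rows.append({"time": t, **latest})
    return rows


# Hypothetical recording: an IMU at 100 Hz and a camera at 50 Hz.
streams = {
    "imu_accel_x": [(0.00, 0.1), (0.01, 0.2), (0.02, 0.1)],
    "camera_frame": [(0.00, "frame_0"), (0.02, "frame_1")],
}
rows = to_rows(streams)

# Serialize to CSV in memory; a file path would work the same way.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["time", *streams])
writer.writeheader()
writer.writerows(rows)
csv_text = buffer.getvalue()
print(csv_text)
```

Forward-filling is only one possible alignment policy; interpolation or dropping incomplete rows may suit a given training pipeline better.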

Flexible ingestion: Load data from your code via the SDK, from storage formats like MCAP, or from proprietary log formats. Extend Rerun when you need custom types or visualizations.

Who is Rerun for?

Rerun is built for teams developing intelligent physical systems:

- Robotics engineers debugging perception, controls, and planning

- Perception teams analyzing sensor data and model outputs

- ML engineers preparing datasets and understanding model behavior

- Autonomy teams developing and testing decision-making systems

If you're working with robots, drones, autonomous vehicles, spatial AI, or any system with sensors that evolve over time, Rerun helps you move faster.

What Rerun is not

To set clear expectations:

- Not a training platform: Use Rerun with PyTorch, TensorFlow, JAX, etc. We prepare your data; you train your models.

- Not a deployment tool: Rerun helps you develop and understand your systems, not deploy them to production.

- Not a robot operating system: Rerun works alongside ROS, ROS 2, or any other robotics stack.

- Not a general visualization tool: We're specialized for physical, multimodal, time-series data.

How do you use it?

- Use the Rerun SDK to log multimodal data from your code or load it from storage

- View live or recorded data in the standalone viewer or embedded in your app

- Build layouts and customize visualizations interactively in the UI or through the SDK

- Query recordings to get clean dataframes into tools like Pandas, Polars, or DuckDB

- Extend Rerun with custom types or visualizations when you need them

We also offer a commercial data platform for teams that need collaborative dataset management, version control, and cloud storage. Learn more.

Get started

Ready to speed up your iteration cycle?

- Quick start guide - Get up and running in minutes

- Examples - See Rerun in action with real data

- Concepts - Learn how Rerun works under the hood

Can't find what you're looking for?

- Join us in the Rerun Community Discord

- Submit an issue in the Rerun GitHub project